2>&1: why stderr no longer matters

In the pre-container world, stdout and stderr were essential parts of the Unix philosophy. Standard output was meant for normal program output, while standard error was designated for error messages and diagnostics. This separation made a lot of sense when applications ran directly on hosts, with logs going into rotating files managed by syslog, logrotate, or custom scripts. Operations teams relied on keeping stderr clean to catch actual issues in production environments. When your error logs got noisy, you missed the important stuff.

But containers changed that. In environments like Docker and Kubernetes, the container runtime captures both stdout and stderr and forwards them to a logging driver, a node-level agent, or a sidecar. The runtime may still record which stream a line came from (Docker's `json-file` driver, for example, stores it in a `stream` field), but that is just one metadata field, and the distinction has become far less relevant. What matters now is not which stream a message comes from, but what's inside the log. The new rule is simple: structure over stream.
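To make this concrete, here is a minimal Python sketch that assumes Docker's default `json-file` logging driver, which stores each captured line as a JSON envelope with `log`, `stream`, and `time` keys. The sample payloads, the `level` key inside them, and the helper names `docker_line` and `parse_docker_line` are illustrative assumptions. The point is that the stream survives only as metadata, while severity comes from the application's own payload.

```python
import json


def docker_line(payload: dict, stream: str, time: str) -> str:
    """Build a line shaped like json-file driver output (illustrative only)."""
    return json.dumps({"log": json.dumps(payload) + "\n", "stream": stream, "time": time})


def parse_docker_line(line: str) -> dict:
    """Unwrap the driver envelope and return the application's structured record."""
    envelope = json.loads(line)
    record = json.loads(envelope["log"])  # trailing newline is ignored by json.loads
    # The originating stream is kept as metadata only; it does not decide severity.
    record["_stream"] = envelope["stream"]
    record["_time"] = envelope["time"]
    return record


lines = [
    docker_line({"level": "INFO", "message": "Service started"}, "stdout",
                "2024-01-01T00:00:00.000000000Z"),
    docker_line({"level": "ERROR", "message": "Connection failed"}, "stdout",
                "2024-01-01T00:00:01.000000000Z"),
]

for raw in lines:
    rec = parse_docker_line(raw)
    print(rec["level"], rec["_stream"], rec["message"])
```

Both records arrive on stdout, yet a downstream tool can still tell the error apart because the `level` field says so.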

Today, logs are part of the application logic. Developers define log levels (debug, info, warn, error, fatal) directly in code, using structured formats (usually JSON). These levels aren't tied to Linux or syslog anymore. Instead, they follow application-level conventions that platforms like Sentry, Datadog, or the ELK stack interpret. The logging pipeline has evolved from simple files to complex distributed systems.

Here's a quick Python example using the logging library:

```python
import json
import logging
import sys


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record):
        log_record = {
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "message": record.getMessage(),
            "service": "api-service",
            "logger": record.name,
            "path": f"{record.pathname}:{record.lineno}",
        }
        # Attach the formatted traceback when the record carries exception info
        if record.exc_info:
            log_record["exception"] = self.formatException(record.exc_info)
        return json.dumps(log_record)


# Configure logger
logger = logging.getLogger("app")
logger.setLevel(logging.DEBUG)

# Send all logs to stdout, not stderr, even for errors
handler = logging.StreamHandler(sys.stdout)
handler.setFormatter(JsonFormatter())
logger.addHandler(handler)

# Application logs
logger.info("Service started")

try:
    raise ConnectionError("database unreachable")  # simulated failure for the example
except ConnectionError:
    # exc_info=True only captures a real traceback inside an except block
    logger.error("Connection failed", exc_info=True)
```

The frontend world is even more disconnected from traditional Unix streams. In browser environments, stdout and stderr don't even exist, yet logging remains critical:

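```javascript
import * as Sentry from "@sentry/browser";

Sentry.init({
  dsn: "your-dsn-url",
  environment: "production",
});

// Log information
Sentry.captureMessage("User loaded profile", "info");

// Log errors; fetchUserData stands in for your own (async) data loader
async function loadProfile() {
  try {
    // await is required, otherwise a rejected promise escapes the try/catch
    await fetchUserData();
  } catch (error) {
    Sentry.captureException(error);
  }
}

loadProfile();
```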

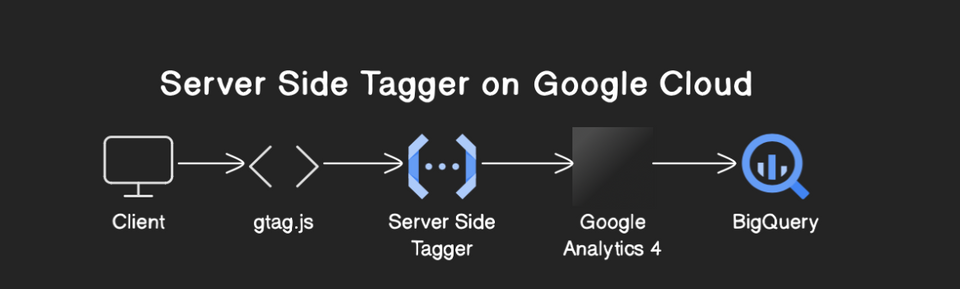

Modern logging pipelines ingest container logs regardless of the stream they came from. Collectors such as Fluent Bit and Fluentd, and platforms such as Grafana Loki, Datadog, Sentry, and the ELK Stack, treat logs as structured data rather than plain text. They parse fields like level, timestamp, and service name, plus metadata such as request IDs and user IDs. A platform's ability to categorize, index, and alert depends on the content and structure of each log, not on its originating stream.
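The sketch below is a toy stand-in for what a collector or platform does with parsed logs; it is not the configuration language of Fluent Bit, Loki, or any other tool. It assumes JSON lines with `level` and a hypothetical `request_id` field, borrows Python's numeric logging levels as the severity scale, and shows alerting and grouping driven purely by fields in the record.

```python
import json
from collections import Counter

# Numeric severities borrowed from Python's logging levels (an assumption;
# each platform defines its own mapping).
SEVERITY = {"DEBUG": 10, "INFO": 20, "WARNING": 30, "ERROR": 40, "CRITICAL": 50}


def parse(line: str) -> dict:
    """Parse one JSON log line; wrap unstructured text so it is not dropped."""
    try:
        record = json.loads(line)
    except json.JSONDecodeError:
        return {"message": line, "level": "UNKNOWN"}
    return record if isinstance(record, dict) else {"message": line, "level": "UNKNOWN"}


def should_alert(record: dict, threshold: str = "ERROR") -> bool:
    """Alert on the parsed 'level' field, never on the stream a line came from."""
    level = str(record.get("level", "")).upper()
    return SEVERITY.get(level, 0) >= SEVERITY[threshold]


logs = [
    '{"level": "INFO", "service": "api-service", "request_id": "r-1", "message": "Service started"}',
    '{"level": "ERROR", "service": "api-service", "request_id": "r-1", "message": "Connection failed"}',
    "plain text line from a legacy script",
]

records = [parse(line) for line in logs]
alerts = [r for r in records if should_alert(r)]
by_request = Counter(r.get("request_id") for r in records)

print(len(alerts))  # 1
print(by_request)   # Counter({'r-1': 2, None: 1})
```

The unstructured line still gets ingested, but it cannot be classified or correlated, which is the practical cost of skipping structure.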

Stream separation made the most sense on a terminal or a single host, where redirecting stdout to a file or pipe should not swallow error messages. Containerization has unified log capture: both streams are typically directed into the same collection pipeline.
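The shell's `2>&1` merges stderr into stdout. As a minimal illustration, Python's `subprocess` module expresses the same redirection with `stderr=subprocess.STDOUT`; the tiny child program here is purely illustrative. Once merged, nothing in the captured text says which stream a line originally used, so any meaning has to live in the line itself.

```python
import subprocess
import sys

# A tiny child program that writes one line to each stream.
# flush=True on stdout keeps the output order deterministic.
CHILD = (
    "import sys\n"
    "print('to stdout', flush=True)\n"
    "print('to stderr', file=sys.stderr, flush=True)\n"
)

# stderr=subprocess.STDOUT is the subprocess equivalent of the shell's 2>&1:
# both streams land in one pipe, much as a runtime funnels them into one pipeline.
result = subprocess.run(
    [sys.executable, "-c", CHILD],
    stdout=subprocess.PIPE,
    stderr=subprocess.STDOUT,
    text=True,
    check=True,
)

print(result.stdout, end="")  # both lines, in order, from a single stream
```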

Log levels and structures are now defined in application code, not at the OS level. Whether a message represents information or an error is a semantic decision made in code, not determined by which Unix stream it uses.
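As an illustration of a level being a semantic decision, here is a sketch of a hypothetical policy that maps HTTP status codes to log levels. The thresholds and the names `level_for_status` and `log_response` are assumptions made for the example; the handler writes every level to stdout, so only the record's level distinguishes a server fault from a routine request.

```python
import logging
import sys

# One handler, one stream: every level goes to stdout.
logging.basicConfig(stream=sys.stdout, level=logging.INFO,
                    format="%(levelname)s %(message)s")
logger = logging.getLogger("app.http")


def level_for_status(status: int) -> int:
    """Hypothetical policy: choose a log level from an HTTP status code."""
    if status >= 500:
        return logging.ERROR    # server fault: someone should look at it
    if status >= 400:
        return logging.WARNING  # client error: notable, usually not actionable
    return logging.INFO         # success or redirect


def log_response(path: str, status: int) -> None:
    # The level is decided here, in code; the output stream never changes.
    logger.log(level_for_status(status), "%s returned %d", path, status)


log_response("/users/42", 200)
log_response("/users/42", 503)
```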

For most containerized applications, the best practice is to output all logs to stdout in a structured format, letting the container runtime and log aggregation tools handle collection, processing, and alerting based on the log's content rather than its stream. The old separation is becoming a relic of the past as we move toward more sophisticated observability solutions.
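One way to apply that practice across a whole Python application is a single `logging.config.dictConfig` call on the root logger. The sketch below assumes a trimmed copy of a JSON formatter (so the block runs on its own) and routes every level to stdout via the `ext://sys.stdout` reference that `dictConfig` supports; field names and the INFO threshold are illustrative choices, not requirements.

```python
import json
import logging
import logging.config


class JsonFormatter(logging.Formatter):
    """Render each record as a single JSON line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "timestamp": self.formatTime(record),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        if record.exc_info:
            payload["exception"] = self.formatException(record.exc_info)
        return json.dumps(payload)


LOGGING = {
    "version": 1,
    "disable_existing_loggers": False,
    # "()" tells dictConfig to build the formatter with this factory
    "formatters": {"json": {"()": JsonFormatter}},
    "handlers": {
        "stdout": {
            "class": "logging.StreamHandler",
            "stream": "ext://sys.stdout",  # every level goes to stdout
            "formatter": "json",
        }
    },
    "root": {"level": "INFO", "handlers": ["stdout"]},
}

logging.config.dictConfig(LOGGING)
logging.getLogger("app").warning("Disk usage high")
```

Because the configuration lives on the root logger, libraries that log through their own named loggers inherit the same stdout handler and JSON shape.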