Monitoring and siblings

A quick attempt to explain the core differences. I'll use Datadog as the example to show some pseudocode implementations.

Monitoring: "What's happening right now?" Answered with dashboards and reports built on metrics (the things we measure).

ex. All requests are currently being processed within 2ms.

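Custom metrics in Datadog (Python):

```python
import time

from datadog import statsd

# Track request processing time (perf_counter is monotonic, unlike time.time)
start_time = time.perf_counter()

# ... process request ...

duration_ms = (time.perf_counter() - start_time) * 1000  # convert seconds to ms

# Sent over UDP to the local Datadog Agent (DogStatsD), which aggregates it per flush interval
statsd.histogram('api.request.duration', duration_ms, tags=['endpoint:/payments', 'method:POST'])
```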
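A histogram does not keep every sample: the Agent turns each flush interval's raw values into a few aggregates (by default roughly count, avg, median, max and a 95th percentile). The sketch below is a hypothetical, stdlib-only model of that aggregation, not the Datadog client; the function name and the nearest-rank percentile are my own assumptions. It also shows that "all requests are processed within 2ms" is a statement about the max, not the average.

```python
import math
import statistics


def summarize_interval(durations_ms):
    """Hypothetical model of one flush interval of a histogram metric.

    Takes the raw request durations (ms) seen during the interval and returns
    the kind of aggregates a histogram reports instead of the raw samples.
    """
    if not durations_ms:
        return {"count": 0}
    ordered = sorted(durations_ms)
    rank = math.ceil(0.95 * len(ordered))  # nearest-rank 95th percentile (an assumption)
    return {
        "count": len(ordered),
        "avg": statistics.mean(ordered),
        "median": statistics.median(ordered),
        "p95": ordered[rank - 1],
        "max": ordered[-1],
    }


summary = summarize_interval([1.2, 1.5, 1.1, 1.9, 1.4])
# "All requests are processed within 2ms" is a claim about the max, not the average.
print(summary["max"] < 2.0)  # True
```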

Alerting: "Something interesting happened, and I have an action to take."

ex. 20 requests in the last minute took more than 10ms, so I'm increasing the timeout for new requests and alerting a human.

A Datadog monitor definition, written as YAML (the fields mirror the Monitor API's JSON payload):

```yaml
name: "High API Response Time"
type: "metric alert"
# Trace duration metrics are reported in seconds, so 0.01 = 10ms.
# This fires on the 1-minute *average* latency, not on a count of slow requests.
query: "avg(last_1m):avg:trace.servlet.request.duration{service:payment-api} > 0.01"
message: |
  @slack-alerts
  Payment API average response time is {{value}}s, exceeding the 0.01s (10ms) threshold.
  Runbook: https://wiki.company.com/payment-api-latency
options:
  thresholds:
    warning: 0.008
    critical: 0.01
  notify_no_data: true
  evaluation_delay: 60
```
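The monitor above alerts on average latency, while the example rule ("20 requests in the last minute took more than 10ms") counts slow requests. A minimal sketch of that counting rule as a sliding window, assuming timestamps (in seconds) arrive in non-decreasing order; `SlowRequestAlert` is a hypothetical class for illustration, not a Datadog feature.

```python
from collections import deque


class SlowRequestAlert:
    """Hypothetical rule: fire when at least `limit` requests slower than
    `threshold_ms` were seen within the last `window_s` seconds."""

    def __init__(self, threshold_ms=10.0, limit=20, window_s=60.0):
        self.threshold_ms = threshold_ms
        self.limit = limit
        self.window_s = window_s
        self._slow = deque()  # timestamps (seconds) of slow requests, oldest first

    def observe(self, ts, duration_ms):
        """Record one request and return True if the alert should fire."""
        if duration_ms > self.threshold_ms:
            self._slow.append(ts)
        # Evict slow requests that have fallen out of the window.
        while self._slow and self._slow[0] <= ts - self.window_s:
            self._slow.popleft()
        return len(self._slow) >= self.limit


rule = SlowRequestAlert()
fired = [rule.observe(ts=i, duration_ms=12.0) for i in range(20)]
print(fired[-1])  # True: the 20th slow request within 60s trips the rule
```

When `observe` returns True, the "action" from the example (raise the timeout, page a human) would run; that part is left out because it depends entirely on the service.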

Logging: "Everything an application does, noted down." This can be discrete events, errors, or unexpected behaviours in software.

ex. I'm processing a request to /payments/sendMoney from a Google Pixel 3 running Android Oreo in Bangalore.

An example of structured JSON logging. This lets us correlate logs with traces via dd.trace_id and dd.span_id, and filter on attributes such as @user_agent, @geo.city, @http.url_details.path, etc.

```json
{
  "timestamp": "2025-05-27T10:30:45Z",
  "level": "INFO",
  "message": "Processing payment request",
  "service": "payment-api",
  "version": "1.2.3",
  "dd": {
    "trace_id": "1234567890123456789",
    "span_id": "987654321"
  },
  "http": {
    "method": "POST",
    "url": "/payments/sendMoney",
    "status_code": 200
  },
  "user": {
    "id": "user123",
    "device": "Google Pixel 3",
    "os": "Android Oreo"
  },
  "geo": {
    "city": "Bangalore",
    "country": "India"
  },
  "custom": {
    "payment_amount": 1000,
    "currency": "INR"
  }
}
```
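One way to emit log lines shaped like the JSON above is a custom formatter on Python's standard `logging` module. This is a stdlib-only sketch: `JsonFormatter`, the `ctx` extra key and the hard-coded service/version are hypothetical choices, and the trace/span IDs are just the placeholders from the example (in a real service they would come from the active trace).

```python
import json
import logging
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single JSON object (hypothetical helper)."""

    def format(self, record):
        payload = {
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc)
            .strftime("%Y-%m-%dT%H:%M:%SZ"),
            "level": record.levelname,
            "message": record.getMessage(),
            "service": "payment-api",  # hypothetical: normally read from config/env
            "version": "1.2.3",
        }
        # Structured context passed via extra={"ctx": {...}} is merged in as-is.
        payload.update(getattr(record, "ctx", {}))
        return json.dumps(payload)


logger = logging.getLogger("payment-api")
handler = logging.StreamHandler()
handler.setFormatter(JsonFormatter())
logger.addHandler(handler)
logger.setLevel(logging.INFO)

logger.info(
    "Processing payment request",
    extra={"ctx": {
        "dd": {"trace_id": "1234567890123456789", "span_id": "987654321"},
        "http": {"method": "POST", "url": "/payments/sendMoney", "status_code": 200},
        "geo": {"city": "Bangalore", "country": "India"},
    }},
)
```

Keeping the context in nested dicts (rather than flattening it into the message string) is what makes attributes like `@geo.city` filterable later.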

Tracing: "A single user's (or a small sample's) journey through the software (which can cover part or all of several services in a larger system)." This is mostly about identifying what the user flow looks like and how it is impacted.

ex. A user went to the home page, entered the system, and got as far as the payment page, but our payment service never received a request for this user.

```python
from ddtrace import tracer

@tracer.wrap("payment.process", service="payment-api")
def process_payment(user_id, amount):
    with tracer.trace("payment.validate", service="payment-api") as span:
        span.set_tag("user.id", user_id)
        span.set_tag("payment.amount", amount)
        # validation logic

    with tracer.trace("payment.external_call", service="payment-gateway") as span:
        # call to external payment provider
        span.set_tag("provider", "stripe")
```
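The example above (a user reaches the payment page, but the payment service never sees the request) is a question about whole traces rather than single spans. A small stdlib-only sketch, assuming spans have already been exported as flat records with `trace_id`, `service` and `name` fields; the records, span names such as `page.payment`, and the helper function are all hypothetical.

```python
from collections import defaultdict

# Hypothetical flattened span records; each trace_id is one user's journey.
spans = [
    {"trace_id": "t1", "service": "web-frontend", "name": "page.home"},
    {"trace_id": "t1", "service": "web-frontend", "name": "page.payment"},
    {"trace_id": "t1", "service": "payment-api", "name": "payment.process"},
    {"trace_id": "t2", "service": "web-frontend", "name": "page.home"},
    {"trace_id": "t2", "service": "web-frontend", "name": "page.payment"},
    # t2 stops at the payment page: no payment-api span was ever recorded.
]


def journeys_missing_service(spans, reached_span, expected_service):
    """Return trace IDs that contain `reached_span` but no span from `expected_service`."""
    by_trace = defaultdict(list)
    for span in spans:
        by_trace[span["trace_id"]].append(span)
    missing = []
    for trace_id, trace_spans in by_trace.items():
        reached = any(s["name"] == reached_span for s in trace_spans)
        served = any(s["service"] == expected_service for s in trace_spans)
        if reached and not served:
            missing.append(trace_id)
    return missing


print(journeys_missing_service(spans, "page.payment", "payment-api"))  # ['t2']
```

This only works if the frontend and the payment service propagate the same trace ID, which is the whole point of distributed tracing.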

Observability: "Why is something happening?" This can be answered with the help of logs + traces + metrics, by asking the system any question using any combination of them.

ex. Why are only 20 requests taking 10ms while the others take 2ms? What are these 20 requests?

A Datadog query like the one below can tell us more. Of course, observability can also come from a set of dashboards, richer queries, correlations, etc.

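```sql
-- Illustrative SQL-style pseudocode, not literal Datadog syntax: in the Trace
-- Explorer the same question is a span search plus a group-by on the endpoint.
-- Find slow payment-api traces (duration in seconds: 0.01 s = 10 ms), grouped by endpoint
SELECT
    count(*) AS request_count,
    avg(@duration) AS avg_duration,
    @http.url_details.path AS endpoint
FROM traces
WHERE @duration > 0.01
  AND service = 'payment-api'
GROUP BY @http.url_details.path
ORDER BY avg_duration DESC
```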
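The pseudo-query boils down to filter, group, aggregate, sort. Here is the same logic as a plain-Python sketch over a hypothetical list of span dicts; the field names (`service`, `path`, `duration`) and durations in seconds are assumptions chosen to mirror the query, not Datadog's storage format.

```python
from collections import defaultdict


def slow_endpoints(spans, service, min_duration_s=0.01):
    """Count and average the slow spans per endpoint, slowest average first."""
    groups = defaultdict(list)
    for span in spans:
        # WHERE @duration > 0.01 AND service = '...'
        if span["service"] == service and span["duration"] > min_duration_s:
            groups[span["path"]].append(span["duration"])  # GROUP BY endpoint
    rows = [
        {"endpoint": path, "request_count": len(d), "avg_duration": sum(d) / len(d)}
        for path, d in groups.items()
    ]
    return sorted(rows, key=lambda r: r["avg_duration"], reverse=True)  # ORDER BY ... DESC


spans = [
    {"service": "payment-api", "path": "/payments/sendMoney", "duration": 0.012},
    {"service": "payment-api", "path": "/payments/sendMoney", "duration": 0.018},
    {"service": "payment-api", "path": "/payments/status", "duration": 0.011},
    {"service": "payment-api", "path": "/payments/status", "duration": 0.002},
    {"service": "auth-api", "path": "/login", "duration": 0.050},
]
for row in slow_endpoints(spans, "payment-api"):
    print(row)
```

Answering "what are these 20 requests?" is then a matter of adding more dimensions to the group-by (device, city, version) until the slow ones stand out.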

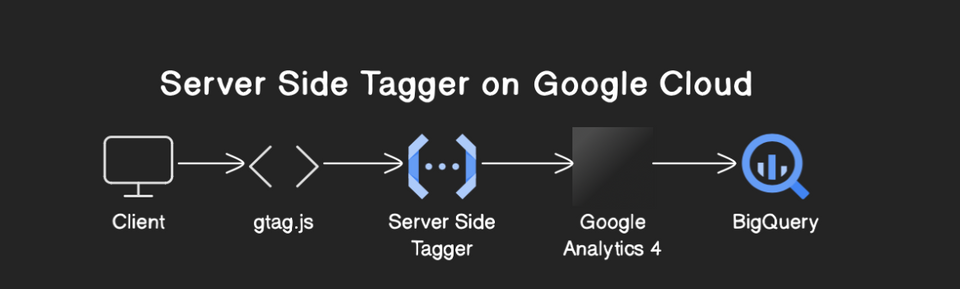

P.S.: I highly recommend heading to https://namc.in/posts/cloud-observability/ for further reading. Namrata takes it a step further and shows the implementation details in a Kubernetes cluster.