Crispy Cron

A meditation on unnecessary computation, design patterns, and the evolving nature of "good" architecture

Somewhere in a data centre, a cron job dutifully awakens every minute, checks a database for new records, finds none, logs "No work to do," and goes back to sleep. Multiply that by tens of services at each of thousands of companies, and you've got quite a symphony, or a noise, of computation.

It always brings me back to a fundamental question in software design: When should code execute?

Like a security guard making rounds in an empty building, or a chef preheating an oven for a meal that might never come—there's something deeply unsettling about computational effort that discovers its own pointlessness. Yet here we are, feeding the machine of digital Sisyphus, one empty queue check at a time.

The Polling Problem

Traditional system design was built around polling, the digital equivalent of repeatedly asking "Are we there yet?" Consider the classic email client that checks for new messages every 5 minutes, or the background job processor that scans a queue every 30 seconds. These patterns were well suited to a time when:

- Network connections were unreliable and internet speeds were lower.

- Communications were hard, and notifications were costly to implement.

- Server resources were precious but predictable.

- Latency was in minutes, not seconds.

But polling is inherently wasteful. If you check for new orders every minute and orders arrive every hour, you're executing 59 pointless operations for every meaningful one. That's a 98.3% waste ratio—a number that would make any performance engineer weep.
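The arithmetic behind that figure can be checked with a small sketch. The function name `waste_ratio` and its parameters are illustrative, not part of any library.

```python
def waste_ratio(polls_per_period: int, useful_polls: int) -> float:
    """Fraction of polls in a period that found nothing to do."""
    if polls_per_period <= 0:
        raise ValueError("polls_per_period must be positive")
    return (polls_per_period - useful_polls) / polls_per_period


# One poll per minute, one order per hour: 60 polls, 1 of them useful.
ratio = waste_ratio(polls_per_period=60, useful_polls=1)
print(f"{ratio:.1%}")  # prints 98.3%
```

The same function makes it easy to see how the ratio shifts when the polling interval or the arrival rate changes.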

It's like having a taxi driver circle the block looking for fares instead of waiting at the taxi stand. Functionally sound, economically questionable, philosophically maddening.

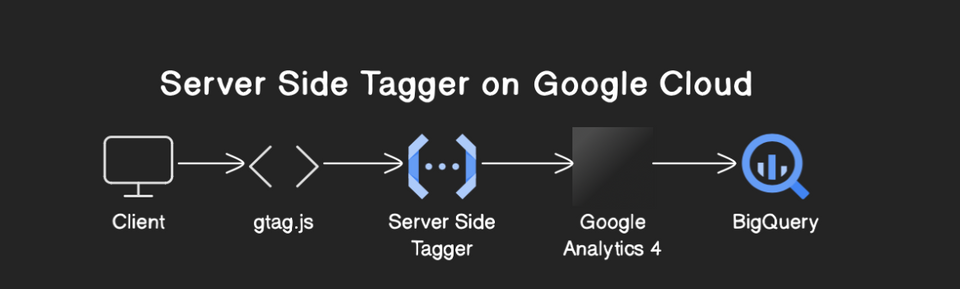

The Event-Driven Approach

The antidote to polling madness is event-driven architecture. Instead of constantly checking if something happened, you wait for notifications that something has happened. Message queues, webhooks, database triggers, and event streams all follow this philosophy: reactive execution over proactive checking.

```
// The polling path: a continuously running task, or a cron job firing every minute
tasks = database.get_tasks_to_run_now()
if tasks are empty:
    log("no tasks to run")
else:
    process_tasks(tasks)

// The enlightened path: Zen-like patience
task_queue.subscribe(task -> {
    process_task(task) // Only when there's actually work
})
```
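To make the contrast concrete, here is a minimal runnable sketch in Python. Everything in it is a hypothetical stand-in: `TaskQueue` is a toy in-memory publisher rather than a real message broker, and `arrivals` maps a simulated minute to the tasks that show up in that minute.

```python
class TaskQueue:
    """Toy in-memory queue that pushes each task to its subscribers."""

    def __init__(self):
        self._subscribers = []

    def subscribe(self, handler):
        self._subscribers.append(handler)

    def publish(self, task):
        for handler in self._subscribers:
            handler(task)


def simulate_polling(arrivals: dict[int, list[str]], minutes: int):
    """Check once per simulated minute; return (checks made, tasks processed)."""
    checks, processed = 0, []
    for minute in range(minutes):
        checks += 1
        tasks = arrivals.get(minute, [])
        if not tasks:
            continue  # the "no tasks to run" branch
        processed.extend(tasks)
    return checks, processed


def simulate_events(arrivals: dict[int, list[str]], minutes: int):
    """Run the handler only when a task is published; return (invocations, tasks)."""
    invocations, processed = 0, []

    def handle(task):
        nonlocal invocations
        invocations += 1
        processed.append(task)

    task_queue = TaskQueue()
    task_queue.subscribe(handle)
    for minute in range(minutes):
        for task in arrivals.get(minute, []):
            task_queue.publish(task)
    return invocations, processed


arrivals = {7: ["a"], 42: ["b", "c"]}
print(simulate_polling(arrivals, 60))  # prints (60, ['a', 'b', 'c'])
print(simulate_events(arrivals, 60))   # prints (3, ['a', 'b', 'c'])
```

Both approaches process the same three tasks; the difference is 60 executions versus 3.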

Of course, event-driven systems are efficient. Code doesn't run until it needs to. There are no unnecessary database queries, no log lines with nothing meaningful to offer. But does that make polling an anti-pattern? Not necessarily.

Sometimes the taxi driver should keep circling to find a ride.

The Serverless Paradox

Enter serverless computing, and suddenly our carefully constructed rules start bending like reality in a physics experiment gone wrong. In a world where you pay per execution and functions spin up from cold starts, the old polling patterns seem absurd. Why would you run a Lambda function every minute to check if there's work when you could use EventBridge or SQS triggers?

Yet polling persists, even thrives, in serverless architectures.

Simplicity trumps efficiency. Sometimes a 5-line cron job is easier to understand, deploy, and debug than a complex event-driven system spanning multiple services. When compute costs are measured in fractions of pennies, the economic argument for optimisation weakens considerably.

External systems don't always cooperate. That third-party API you're integrating with might not offer webhooks—it's stuck in 2015, architecturally speaking. Your legacy database might not support triggers without a complete overhaul. Sometimes, polling is the only dance partner available at this particular party.

Timing guarantees matter. Event-driven systems are eventually consistent by nature, which is philosophy-speak for "it'll happen when it happens." If you need something to occur at least once every 5 minutes, polling provides stronger guarantees than hoping events arrive punctually.
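When an external system offers no webhooks, one common way to poll it without reprocessing old data is to remember a high-water mark between runs. The sketch below assumes each item carries a monotonically increasing `id`; `poll_new_items` and `fake_fetch` are hypothetical names, and `fake_fetch` stands in for whatever call the third-party API actually exposes.

```python
from typing import Callable, Iterable


def poll_new_items(
    fetch_items: Callable[[], Iterable[dict]],
    last_seen_id: int,
) -> tuple[list[dict], int]:
    """Return (items newer than last_seen_id, updated high-water mark)."""
    new_items = sorted(
        (item for item in fetch_items() if item["id"] > last_seen_id),
        key=lambda item: item["id"],
    )
    if new_items:
        last_seen_id = new_items[-1]["id"]
    return new_items, last_seen_id


# Hypothetical stand-in for a third-party API with no webhooks.
def fake_fetch() -> list[dict]:
    return [
        {"id": 1, "status": "paid"},
        {"id": 2, "status": "paid"},
        {"id": 3, "status": "new"},
    ]


new_items, cursor = poll_new_items(fake_fetch, last_seen_id=1)
print([item["id"] for item in new_items], cursor)  # prints [2, 3] 3
```

A scheduled job would persist `cursor` somewhere durable and pass it back in on the next run, so an empty poll costs one fetch and nothing more.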

Design Patterns in the Wild

The evolution of acceptable patterns reveals something more profound about software architecture: context is king.

The Heartbeat Pattern emerged from monitoring needs, like a digital drummer keeping time in a jazz ensemble. Services that poll their own health and report status aren't wasting cycles; they're providing crucial operational visibility. A service that goes quiet might be dead, overloaded, or network-partitioned. Regular heartbeats distinguish between "no work" and "no service." In distributed systems, silence is suspicious.
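A heartbeat monitor can be sketched in a few lines. `HeartbeatMonitor` is a hypothetical class, and timestamps are passed in explicitly (rather than read from the clock) so the behaviour is easy to follow; a real monitor would more likely use `time.monotonic()`.

```python
class HeartbeatMonitor:
    """Tracks the last heartbeat per service and flags the ones gone quiet."""

    def __init__(self, timeout_seconds: float):
        self.timeout_seconds = timeout_seconds
        self._last_seen: dict[str, float] = {}

    def beat(self, service: str, now: float) -> None:
        """Record that `service` reported in at time `now`."""
        self._last_seen[service] = now

    def silent_services(self, now: float) -> list[str]:
        """Services whose last heartbeat is older than the timeout."""
        return sorted(
            name
            for name, seen in self._last_seen.items()
            if now - seen > self.timeout_seconds
        )


monitor = HeartbeatMonitor(timeout_seconds=30)
monitor.beat("billing", now=0)
monitor.beat("search", now=0)
monitor.beat("billing", now=25)  # billing keeps reporting; search goes quiet
print(monitor.silent_services(now=40))  # prints ['search']
```

Note that the heartbeat carries no work at all; its only payload is "I am still here."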

The Reconciliation Pattern comes from distributed systems theory and the hard-earned wisdom that entropy creeps into everything. Even with perfect event-driven architecture, networks partition, messages get lost, and cosmic rays flip bits. Periodic reconciliation jobs that scan for inconsistencies aren't a waste—they're insurance against the fundamental unreliability of distributed computing. These jobs often find nothing wrong, and that's not failure; that's success.
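As an illustration, a reconciliation pass can be as simple as diffing a source of truth against a derived copy. The sketch below assumes both fit in memory as dictionaries keyed by record id; `reconcile` and the sample data are hypothetical.

```python
def reconcile(source: dict[str, str], replica: dict[str, str]) -> dict[str, list[str]]:
    """Compare a source of truth with a derived copy and report the drift."""
    return {
        # In the source but never reached the replica (for example, a lost event).
        "missing": sorted(key for key in source if key not in replica),
        # In the replica but no longer in the source (for example, a lost delete).
        "orphaned": sorted(key for key in replica if key not in source),
        # Present in both, but the values disagree.
        "mismatched": sorted(
            key for key in source if key in replica and source[key] != replica[key]
        ),
    }


orders = {"o1": "paid", "o2": "shipped", "o3": "paid"}
search_index = {"o1": "paid", "o2": "paid", "o4": "cancelled"}
print(reconcile(orders, search_index))
# prints {'missing': ['o3'], 'orphaned': ['o4'], 'mismatched': ['o2']}
```

On a healthy system all three lists come back empty, which is exactly the "nothing wrong" outcome described above.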

The Batch Processing Pattern recognises that sometimes efficiency comes from accumulation, like carpooling for data. Instead of processing one record at a time as events arrive, you might deliberately introduce a delay to process records in batches. Here, "unnecessary" execution becomes a design choice trading latency for throughput—those empty queue checks are intermissions between performances.
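One way to sketch this trade-off is a buffer that flushes when it is full or when its oldest record has waited long enough. `BatchBuffer`, `max_size`, and `max_wait` are hypothetical names, and time is passed in explicitly to keep the example deterministic.

```python
from typing import Callable, Optional


class BatchBuffer:
    """Accumulates records and flushes them by size or by age."""

    def __init__(self, max_size: int, max_wait: float, flush: Callable[[list], None]):
        self.max_size = max_size
        self.max_wait = max_wait
        self.flush = flush
        self._records: list = []
        self._oldest: Optional[float] = None  # arrival time of the oldest buffered record

    def add(self, record, now: float) -> None:
        if not self._records:
            self._oldest = now
        self._records.append(record)
        if len(self._records) >= self.max_size:
            self._flush()

    def tick(self, now: float) -> None:
        """Periodic check; most calls find nothing to do."""
        if self._records and now - self._oldest >= self.max_wait:
            self._flush()

    def _flush(self) -> None:
        batch, self._records = self._records, []
        self._oldest = None
        self.flush(batch)


batches = []
buffer = BatchBuffer(max_size=3, max_wait=10.0, flush=batches.append)
buffer.add("a", now=0)
buffer.add("b", now=1)
buffer.tick(now=5)   # too early, nothing happens
buffer.tick(now=10)  # oldest record has waited 10s, flush ['a', 'b']
for t, record in enumerate(["c", "d", "e"], start=11):
    buffer.add(record, now=t)  # third add hits max_size and flushes
print(batches)  # prints [['a', 'b'], ['c', 'd', 'e']]
```

The `tick` calls that do nothing are the "intermissions": they are what bounds how long a record can sit in the buffer.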

Wasted Resources?

What's waste? It depends on what you are optimising for.

- CPU cycles: Polling is wasteful, events are efficient

- Developer time: Polling is simple, events are complex

- Latency: Events win decisively

- Reliability: Polling provides predictability

- Cost: Depends entirely on your pricing model

- Debuggability: Polling is linear and traceable, like breadcrumbs in a fairy tale

As cloud economics evolved, some traditional "waste" became acceptable or even preferable. When AWS charges you $0.0000002 per Lambda request, burning a few thousand pointless executions costs less than the engineer-hours to build a perfectly efficient event system. It's the difference between optimising for theoretical purity and optimising for pragmatic reality.
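A back-of-the-envelope calculation shows the scale. The sketch below uses only the per-request price quoted above and assumes a 30-day month; it deliberately ignores duration charges and any free tier, so treat the result as a rough lower bound rather than a bill.

```python
PRICE_PER_REQUEST_USD = 0.0000002  # per-request figure quoted in the text


def monthly_request_cost(invocations_per_minute: int = 1, days: int = 30) -> float:
    """Request charges only, for a job invoked at a fixed rate all month."""
    invocations = invocations_per_minute * 60 * 24 * days
    return invocations * PRICE_PER_REQUEST_USD


# One invocation per minute for 30 days is 43,200 requests.
print(f"${monthly_request_cost():.5f}")  # prints $0.00864
```

Under those assumptions, a month of every-minute polling costs less than one cent in request charges.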

Anti-Patterns That Aren't

The most delicious aspect of this evolution is watching former anti-patterns undergo complete rehabilitation, like fashion trends that cycle from embarrassing to vintage to cutting-edge:

Database Polling was once the computational equivalent of wearing socks with sandals—technically functional but socially unacceptable. Now it's a legitimate pattern for serverless applications that need to react to database changes without complex trigger infrastructure.

Fixed Interval Processing was inefficient when servers ran 24/7, like keeping a restaurant open around the clock. In a world where containers spin up on demand, having predictable execution windows can actually improve resource utilisation—it's the difference between always-on and appointment-based service.

Duplicate Work transformed from waste to fault tolerance through the magic of reframing. Running the same job on multiple instances and letting them coordinate through database locks is sometimes simpler than building perfect work distribution. It's distributed systems democracy: may the best process win.
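One simple way to let duplicate workers coordinate through the database is an atomic "claim" update: every worker tries to claim the job, and only the first update matches the unclaimed row. The sketch below uses Python's built-in `sqlite3` with an in-memory database and a hypothetical `jobs` table; a production setup would have separate processes talking to a shared database, and the exact locking behaviour depends on the database engine.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create an in-memory database with one unclaimed job (demo only)."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE jobs (id INTEGER PRIMARY KEY, claimed_by TEXT)")
    conn.execute("INSERT INTO jobs (id, claimed_by) VALUES (1, NULL)")
    conn.commit()
    return conn


def try_claim(conn: sqlite3.Connection, job_id: int, worker: str) -> bool:
    """Claim the job if nobody has; True means this worker won."""
    with conn:  # commits on success, rolls back on error
        cursor = conn.execute(
            "UPDATE jobs SET claimed_by = ? WHERE id = ? AND claimed_by IS NULL",
            (worker, job_id),
        )
    # Exactly one row changes for the winner; the loser's UPDATE matches nothing.
    return cursor.rowcount == 1


conn = make_db()
print(try_claim(conn, 1, "worker-a"))  # prints True
print(try_claim(conn, 1, "worker-b"))  # prints False
```

The losing worker did "wasted" work by waking up and trying, but that redundancy is what keeps the job running if the winner's instance disappears.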

The Wisdom of When

So, when should code execute when there's nothing to execute? The answer isn't binary—it's contextual, like most interesting questions in software engineering:

Execute unnecessary code when:

- The cost is negligible compared to alternatives

- Simplicity provides operational benefits that compound over time

- You need timing guarantees in an uncertain world

- External constraints force your architectural hand

- The "waste" serves a secondary purpose (monitoring, reconciliation, proof of life)

Avoid unnecessary execution when:

- Resources are genuinely constrained, and every cycle counts

- The waste is orders of magnitude larger than the useful work

- Better alternatives are available and actually maintainable

- The pattern creates cascading inefficiencies that propagate through your system

Embracing Pragmatic Inefficiency

Perhaps the real insight isn't about eliminating unnecessary execution but about being intentional about it. The best systems don't chase efficiency for its own sake; they aim for adaptability. A system should optimise for the right constraints in its particular context.

A cron job that runs every minute and finds nothing to do isn't necessarily a broken design—it might be computational honesty. It could be a conscious choice to prioritise simplicity over efficiency, or reliability over optimisation. The difference between thoughtful pragmatism and accidental waste is what separates elegant systems from bureaucratic nightmares.

As our tools evolve, so too must our definitions of good design. What was once an anti-pattern might become best practice when the underlying assumptions shift beneath our feet. Like architectural styles changing based on available materials—when steel became cheap, we built differently.

To wear a philosophical hat for a moment:

Optimise for what actually matters, not what feels pure.

That cron job checking an empty queue every minute? Maybe it's not computational nihilism after all. It could be code being honest about the messy, pragmatic world it operates in: a lighthouse keeper scanning empty seas, keeping watch through the many quiet nights so that the few eventful ones remain manageable.

And sometimes, that's exactly what we need.