Code to Culture

How a seemingly simple AWS region migration revealed the hidden architecture of organizational culture

The Call

The email landed in my inbox on a Tuesday morning, deceptively simple in its request: "Need help migrating our infrastructure from us-east-1 to ap-south-1 due to Indian financial data regulations. Everything's in Terraform. Should be straightforward."

What seemed like a technical migration would become a six-month journey into the heart of organizational culture, where every misplaced resource and tangled dependency told a story about the people who created them.

The Archaeological Dig Begins

When I first cloned their infrastructure repository, I thought my Git had broken. A single main.tf file stretched across 3,847 lines. No modules. No organization. Just raw Terraform resources cascading down like a digital waterfall of infrastructure declarations.

```hcl
# Line 1: VPC
resource "aws_vpc" "main" { ... }

# Line 47: Random RDS instance
resource "aws_db_instance" "analytics_db" { ... }

# Line 156: Another VPC (wait, what?)
resource "aws_vpc" "legacy_vpc" { ... }

# Line 203: Kubernetes cluster
resource "aws_eks_cluster" "prod" { ... }
```

But the lack of structure wasn't what caught my attention; the stories hidden in the resource names and configurations were. A legacy_vpc living alongside the main VPC. Database instances with names like temp_analytics_backup_final_v2. Security groups with descriptions like "Added by John for the payment service - temp fix."

Each resource was a fossil, preserving a moment in the company's evolution. Like any good archaeologist, I began to piece together the civilization behind these digital artifacts.
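The first pass of that dig can be sketched in a few lines of Python. This is a minimal, hypothetical helper, assuming the whole configuration sits in one file like the 3,847-line main.tf. It finds resource declarations with a regular expression rather than a real HCL parser, and it treats name fragments such as temp, final, fix, and legacy as "fossil" markers; that marker list is an illustrative guess, not a standard.

```python
import re
from collections import Counter

# Matches declarations such as: resource "aws_vpc" "legacy_vpc" {
# A regex, not an HCL parser: it only looks at resource blocks.
RESOURCE_RE = re.compile(r'^\s*resource\s+"([^"]+)"\s+"([^"]+)"', re.MULTILINE)

# Hypothetical "fossil" markers: name fragments that hint at rushed or temporary work.
FOSSIL_MARKERS = ("temp", "final", "fix", "legacy", "backup", "_v2", "_v3")


def inventory(tf_source: str):
    """Count resources per type and list addresses whose names look like fossils."""
    declarations = RESOURCE_RE.findall(tf_source)
    counts = Counter(rtype for rtype, _ in declarations)
    fossils = [
        f"{rtype}.{name}"
        for rtype, name in declarations
        if any(marker in name.lower() for marker in FOSSIL_MARKERS)
    ]
    return counts, fossils


if __name__ == "__main__":
    sample = '''
resource "aws_vpc" "main" {}
resource "aws_db_instance" "temp_analytics_backup_final_v2" {}
resource "aws_vpc" "legacy_vpc" {}
'''
    counts, fossils = inventory(sample)
    print(counts["aws_vpc"])  # 2
    print(fossils)  # ['aws_db_instance.temp_analytics_backup_final_v2', 'aws_vpc.legacy_vpc']
```

The substring matching is deliberately crude (a name containing "prefix" would trip the "fix" marker); the goal is a list of artifacts worth asking someone about, not a verdict.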

The Kubernetes Revelation

Buried within the Terraform monolith, I discovered something even worse: Kubernetes YAML files. A handful of them, embedded directly in the infrastructure repository, some with hardcoded values, others with environment variables referencing AWS resources that may or may not exist depending on which team had last run terraform apply.

```yaml
# pod-payment-service.yaml
apiVersion: v1
kind: Pod
metadata:
  name: payment-service
spec:
  containers:
    - name: payment
      image: payment-service:latest
      env:
        - name: DB_HOST
          value: "prod-db.us-east-1.rds.amazonaws.com" # TODO: Make dynamic
        - name: SECRET_KEY
          value: "sk-1234567890abcdef" # FIX: Move to secrets
```

The comments told the real story. "TODO: Make dynamic" had been sitting there for 18 months. "FIX: Move to secrets" was authored by someone who had left the company a year ago. These weren't just technical artifacts—they were organizational scars, evidence of good intentions never finding the time or priority to be properly addressed.
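For a region migration, leftovers like these are exactly what breaks first. Below is a minimal sketch of a scanner that flags them, under stated assumptions: manifests are read as plain text (no YAML library), region names follow the usual shape such as us-east-1 or ap-south-1, and any variable whose name contains SECRET, PASSWORD, TOKEN, or KEY is treated as sensitive. These patterns are illustrative heuristics, not a complete secret detector.

```python
import re

# Common AWS region shapes, e.g. "us-east-1" or "ap-south-1" (heuristic, not exhaustive).
REGION_RE = re.compile(r"\b(?:us|eu|ap|sa|ca|me|af|il|mx)-[a-z]+-\d\b")
# Hypothetical convention: env var names containing these words hold secrets.
SENSITIVE_RE = re.compile(r"SECRET|PASSWORD|TOKEN|KEY", re.IGNORECASE)
ENV_NAME_RE = re.compile(r"^\s*-\s*name:\s*(\S+)")
# Matches "value:" only; "valueFrom:" (e.g. a secretKeyRef) is deliberately not matched.
ENV_VALUE_RE = re.compile(r"^\s*value:\s*(.+)$")


def find_hardcoded(manifest: str):
    """Return (line_number, reason) pairs for hardcoded regions and literal secrets."""
    findings = []
    env_name = None  # most recent "- name:" seen, i.e. the variable a value belongs to
    for lineno, line in enumerate(manifest.splitlines(), start=1):
        name_match = ENV_NAME_RE.match(line)
        if name_match:
            env_name = name_match.group(1)
            continue
        value_match = ENV_VALUE_RE.match(line)
        if not value_match:
            continue
        value = value_match.group(1)
        if REGION_RE.search(value):
            findings.append((lineno, f"hardcoded region in {env_name}"))
        if env_name and SENSITIVE_RE.search(env_name):
            findings.append((lineno, f"literal value for sensitive variable {env_name}"))
    return findings
```

Run against the pod manifest above, it flags the DB_HOST line for its us-east-1 hostname and the SECRET_KEY line for its literal value, while an entry that uses valueFrom with a secretKeyRef passes untouched.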

The Multi-Team Archaeology

As I dug deeper into the Git history, a pattern emerged. The repository's structure—or lack thereof—was a perfect mirror of the organization's communication patterns.

The commits revealed the truth:

- Frontend Team: Pushed directly to main with commit messages like "Updated k8s deployment for new feature"

- Backend Team: Created resources in main.tf with names like backend_service_db_v3_final

- DevOps Team: Tried to organize things with comments like "# TODO: Refactor section"

- Security Team: Added patches with names like security_group_emergency_fix

Each team worked in isolation, adding their pieces to the infrastructure puzzle without seeing the bigger picture. The result was a digital Tower of Babel—a system reflecting the fragmented communication patterns of the organization itself.

State Drift: The Organizational Entropy

The Terraform state file told its own story of organizational dysfunction. Resources that existed in AWS but not in the configuration. Configuration declaring resources that had been manually deleted months ago. The state drift wasn't just a technical problem; it was entropy made visible, the inevitable result of multiple teams making changes without coordination.

Running terraform plan became an exercise in digital archaeology:

```text
Plan: 27 to add, 15 to change, 8 to destroy.
```

But which changes were intentional? Which resources had been manually modified in the AWS console during a late-night incident? Which security groups had been tweaked by well-meaning engineers trying to solve immediate problems?

The infrastructure had become a palimpsest—layers of changes written over previous changes, each team's modifications obscuring the work of others.
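The one-line plan summary hides the answers to those questions, but Terraform can also emit the plan as JSON (terraform plan -out=tfplan, then terraform show -json tfplan), which lists every planned change by resource address. Here is a minimal sketch that buckets those changes, assuming the resource_changes and change.actions fields of Terraform's JSON output format. It reads resource_drift, which recent Terraform versions use to report objects changed outside Terraform (such as a console edit during an incident), only if that key is present. A replacement lands under both add and destroy, matching how the CLI summary counts it.

```python
import json


def summarize_plan(plan_json: str):
    """Bucket `terraform show -json` plan output by add/change/destroy, plus drift."""
    plan = json.loads(plan_json)
    buckets = {"add": [], "change": [], "destroy": []}
    for rc in plan.get("resource_changes", []):
        actions = rc["change"]["actions"]
        address = rc["address"]
        # Replacements are ["delete", "create"] or ["create", "delete"]: count both.
        if "create" in actions:
            buckets["add"].append(address)
        if "delete" in actions:
            buckets["destroy"].append(address)
        if actions == ["update"]:
            buckets["change"].append(address)
        # "no-op" and "read" actions fall through and are ignored.
    # Treated as optional: older Terraform versions do not emit this key.
    drifted = [rd["address"] for rd in plan.get("resource_drift", [])]
    return buckets, drifted
```

Saving the output with terraform show -json tfplan > plan.json and passing the file's contents to summarize_plan turns "8 to destroy" into eight named addresses, and any drifted address is a candidate for the late-night console fix nobody wrote down.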

The Six-Month Cultural Transformation

What started as a region migration became something much larger: a systematic transformation of how the organization thought about infrastructure, ownership, and collaboration.

Month 1-2: The Archaeological Phase

We began by mapping the existing system, not just the technical dependencies but the human ones. Who owned what? Which teams communicated? Where were the organizational boundaries reflected in the infrastructure boundaries?

I introduced the concept of "infrastructure as autobiography": the idea that their current system was a perfect record of their organizational evolution. Every duplicated resource, every hardcoded value, every temporary fix that became permanent told a story about deadlines, priorities, and communication patterns.
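Part of that human mapping can be pulled from the repository's own history. A minimal sketch, assuming the log is produced with git log --name-only --format='@%an' so that author lines carry an @ prefix (a convention chosen here for easy parsing; a file path that itself begins with @ would confuse it). It records which authors touched each file. Translating authors into teams is left to a hand-maintained lookup and is not shown.

```python
from collections import defaultdict


def ownership_map(git_log_output: str):
    """Map each file path to the set of authors who changed it.

    Expects the output of: git log --name-only --format='@%an'
    Lines starting with '@' name the author; other non-empty lines are paths.
    """
    touched_by = defaultdict(set)
    author = None
    for raw in git_log_output.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("@"):
            author = line[1:]
        elif author is not None:
            touched_by[line].add(author)
    return dict(touched_by)


def hotspots(mapping, min_authors: int = 3):
    """Files changed by at least `min_authors` people, most-contended first."""
    busy = [path for path, authors in mapping.items() if len(authors) >= min_authors]
    return sorted(busy, key=lambda path: (-len(mapping[path]), path))
```

Files that many different people have edited are where coordination, or the lack of it, tends to show up, which makes them a reasonable agenda for the first ownership conversations.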

Month 3-4: The Modularization Revolution

The technical work of creating Terraform modules became an exercise in organizational design. Each module forced conversations about ownership, interfaces, and responsibilities that had never happened before.

```hcl
# Before: Monolithic chaos
resource "aws_db_instance" "payment_db" {
  # 47 lines of configuration
  # Mixed with application logic
  # No clear ownership
}

# After: Clear module boundaries
module "payment_database" {
  source           = "../modules/database"
  team_owner       = "payments"
  environment      = var.environment
  backup_retention = 30
  # Clear interface, explicit ownership
}
```

But the real magic wasn't in the code; it was in the conversations these modules forced. Suddenly, teams had to talk to each other about interfaces, dependencies, and shared responsibilities.
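One way to keep the ownership question from lapsing is to make the declaration mandatory. A minimal lint sketch, assuming every module call is expected to pass a team_owner argument like the payment_database example. It counts braces line by line instead of parsing HCL, so braces inside strings, heredocs, or comments will confuse it, and a module block written on a single line is always reported. A real HCL parser would be the sturdier choice for enforcement.

```python
import re

MODULE_START_RE = re.compile(r'^\s*module\s+"([^"]+)"\s*\{')
TEAM_OWNER_RE = re.compile(r"^\s*team_owner\s*=")


def modules_missing_owner(tf_source: str):
    """Return the names of module blocks that do not set team_owner at top level."""
    missing = []
    current = None     # name of the module block we are inside, if any
    depth = 0          # brace depth relative to that module block
    has_owner = False
    for line in tf_source.splitlines():
        if current is None:
            match = MODULE_START_RE.match(line)
            if not match:
                continue
            current, depth, has_owner = match.group(1), 0, False
        # Only arguments directly inside the module block count, not nested maps.
        if depth == 1 and TEAM_OWNER_RE.match(line):
            has_owner = True
        depth += line.count("{") - line.count("}")
        if depth <= 0:
            if not has_owner:
                missing.append(current)
            current = None
    return missing
```

A team_owner key buried inside a nested tags map does not satisfy the check, on the assumption that ownership belongs in the module's interface rather than in a tag someone might forget to propagate.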

Month 5-6: The Organizational Transformation

As we rebuilt the infrastructure with proper modules, clear ownership, and explicit dependencies, something remarkable happened: the organization's communication patterns began to change too.

Teams started holding cross-functional planning sessions. The DevOps team became facilitators rather than gatekeepers. Security reviews became collaborative design sessions rather than post-hoc audits.

The infrastructure repository transformed from a chaotic dumping ground into a well-organized reflection of the new organizational structure:

```text
├── modules/
│   ├── networking/    # Owned by Platform Team
│   ├── databases/     # Owned by Data Team
│   ├── kubernetes/    # Owned by DevOps Team
│   └── security/      # Owned by Security Team
├── environments/
│   ├── production/
│   ├── staging/
│   └── development/
└── applications/
    ├── payment-service/
    ├── user-service/
    └── analytics-service/
```

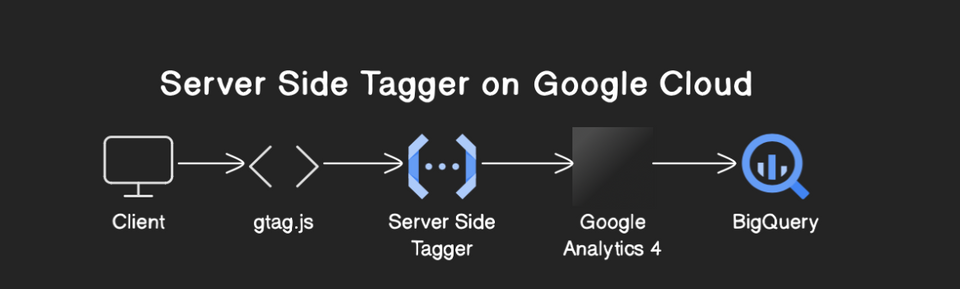
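The ownership comments in that layout can double as data. A minimal sketch, assuming the four module directories are the only owned paths and that a changed file belongs to the team whose directory is its longest matching prefix. Under that assumption, environments/ and applications/ come back unowned, which is itself a useful prompt for a conversation. On GitHub, the same mapping usually lives in a CODEOWNERS file, which requests reviews from owners automatically; this function only makes the lookup explicit.

```python
# Ownership taken from the comments in the repository tree; everything else is unowned.
OWNERS = {
    "modules/networking/": "Platform Team",
    "modules/databases/": "Data Team",
    "modules/kubernetes/": "DevOps Team",
    "modules/security/": "Security Team",
}


def owner_of(path: str, owners=OWNERS):
    """Return the team owning `path`, chosen by the longest matching directory prefix."""
    matches = [prefix for prefix in owners if path.startswith(prefix)]
    if not matches:
        return None
    return owners[max(matches, key=len)]


def group_by_owner(paths, owners=OWNERS):
    """Group changed files by owning team; unowned files are collected under None."""
    grouped = {}
    for path in paths:
        grouped.setdefault(owner_of(path, owners), []).append(path)
    return grouped
```

Longest-prefix matching only matters once ownership nests, for example if a hypothetical modules/databases/analytics/ directory were later handed to a different team.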

The CI/CD Renaissance

With the new modular structure came the opportunity to rebuild their CI/CD pipeline from scratch. But again, the technical changes drove organizational ones.

The old system had been a single pipeline anyone could trigger, resulting in a tragedy of the commons where no one felt responsible for failures. The new system introduced the concept of "pipeline ownership"—each module and application had its own pipeline, owned and maintained by the responsible team.

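```yaml
# .github/workflows/database-module.yml
name: Database Module Pipeline

on:
  push:
    paths: ['modules/databases/**']

jobs:
  test:
    runs-on: ubuntu-latest
    steps:
      - uses: actions/checkout@v4
      - uses: hashicorp/setup-terraform@v3
      - name: Validate Terraform
        run: |
          terraform -chdir=modules/databases init -backend=false
          terraform -chdir=modules/databases validate
      - name: Run Security Scans
        run: ./scripts/security-scan.sh modules/databases  # hypothetical script
      - name: Integration Tests
        run: ./scripts/integration-test.sh modules/databases  # hypothetical script

  notify:
    needs: [test]
    if: always()
    runs-on: ubuntu-latest
    steps:
      - name: Notify Database Team
        # Only the database team gets notifications; swap the echo for a real chat or paging hook
        run: echo "Database module pipeline result: ${{ needs.test.result }}"
```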

It wasn't just about technical efficiency; it was about creating accountability and ownership. Teams began to take pride in their pipeline success rates. Green builds became a shared goal rather than someone else's responsibility.
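The routing behind pipeline ownership comes down to matching each push's changed files against every pipeline's path filters. A minimal sketch, with hypothetical pipeline names besides the database one. It uses Python's fnmatch.fnmatchcase, where * also matches /, so "modules/databases/**" behaves like a prefix match. GitHub's own filter syntax differs in details (a single * does not cross directories there), so this is an approximation for reasoning about which teams a push will involve, not a re-implementation of GitHub Actions.

```python
from fnmatch import fnmatchcase

# Hypothetical pipelines: only "database-module" appears in the workflow above.
PIPELINES = {
    "database-module": ["modules/databases/**"],
    "networking-module": ["modules/networking/**"],
    "payment-service": ["applications/payment-service/**"],
}


def pipelines_to_run(changed_files, pipelines=PIPELINES):
    """Sorted names of pipelines whose path filters match at least one changed file.

    Approximation: fnmatchcase lets * match "/" too, unlike GitHub's filter syntax.
    """
    return sorted(
        name
        for name, patterns in pipelines.items()
        if any(fnmatchcase(path, pattern) for path in changed_files for pattern in patterns)
    )
```

A push that touches only README.md triggers nothing, which is the point: every pipeline run, and every notification, maps to a team that owns the change.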

The Migration: A Reflection of Transformation

By the time we were ready for the actual migration from us-east-1 to ap-south-1, the technical work had become almost anticlimactic. The real transformation had already happened in the organization's DNA.

The migration itself was executed with military precision:

- Phase 1: Spin up new infrastructure in ap-south-1 using our battle-tested modules

- Phase 2: Migrate data with zero-downtime techniques

- Phase 3: Gradually shift traffic using weighted DNS routing

- Phase 4: Decommission us-east-1 resources

Each phase was owned by the relevant team, executed using their well-tested pipelines, with clear rollback procedures and communication protocols.
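Phase 3 relies on weighted routing. Assuming it is done with Amazon Route 53 weighted records, each record answers a share of queries equal to its weight divided by the sum of the weights in its group. A minimal sketch of a linear shift schedule under that rule, assuming two records (one per region), integer weights within Route 53's 0 to 255 range, and an evenly spaced ramp; the step count and ramp shape are illustrative, not the ones used in this migration.

```python
def traffic_share(weights: dict) -> dict:
    """Fraction of DNS answers each record gets: its weight over the group's total."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one weight must be positive")
    return {name: weight / total for name, weight in weights.items()}


def shift_schedule(steps: int, max_weight: int = 100):
    """Evenly spaced (old_region_weight, new_region_weight) pairs from all-old to all-new."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    if not 0 < max_weight <= 255:  # Route 53 weights are integers from 0 to 255
        raise ValueError("max_weight must be between 1 and 255")
    schedule = []
    for i in range(steps + 1):
        new = round(max_weight * i / steps)
        schedule.append((max_weight - new, new))
    return schedule


if __name__ == "__main__":
    for old, new in shift_schedule(4):
        share = traffic_share({"us-east-1": old, "ap-south-1": new})
        print(f"us-east-1={old:3d} ap-south-1={new:3d} -> {share['ap-south-1']:.0%} to ap-south-1")
```

Resolvers cache answers for the record's TTL, so real traffic follows each weight change with some lag; holding at each step long enough to watch error rates is what makes the rollback procedures usable.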

The Lasting Impact: Architecture Driving Culture

Six months after the first email, I realized we had witnessed something fascinating. Instead of the organization's communication structure determining the system architecture, we had used system architecture to transform the organization's communication structure.

The modular infrastructure had forced the creation of clear team boundaries and interfaces. The pipeline ownership had created accountability and pride. The explicit dependencies had necessitated cross-team collaboration.

Lessons Learned: Infrastructure as Cultural Artifact

The experience taught me that infrastructure repositories are never just technical artifacts; they're cultural autobiographies. Every resource, every configuration, every commit tells a story about the people and processes that created them.

Key insights from the journey:

1. Start with Archaeology, Not Architecture

Before designing the future state, understand the current state—not just technically, but organizationally. Why does the infrastructure look the way it does? What decisions, constraints, and pressures shaped its evolution?

2. Architecture Can Reshape Communication

While organizations typically design systems mirroring their communication structure, thoughtful system design can also reshape organizational communication patterns.

3. Infrastructure Refactoring is Change Management

Every module you create, every dependency you make explicit, every ownership boundary you establish will require organizational change. Plan for it, facilitate it, and measure it.

4. Technical Debt is Organizational Debt

The hardcoded value in the YAML file isn't just a technical problem; it's evidence of communication failures, priority misalignments, and process gaps. Fix the organization, and the technical debt becomes much easier to address.

Tech debt should be paid in installments. Not all at once.

Also, there's good tech debt and bad tech debt. More on that in another blog post.

5. CI/CD is Culture

Your deployment pipeline reflects your organizational values. Do you value speed over safety? Individual heroics over team collaboration? The pipeline will tell the truth, even when your mission statement doesn't.

The Epilogue: A Repository Transformed

Today, their infrastructure repository stands as a testament to organizational transformation. Clean modules with clear ownership. Comprehensive CI/CD pipelines with team accountability. Documentation that is actually maintained, because people take pride in their work.

But more importantly, the teams communicate differently. They plan together. They own their failures and celebrate their successes collectively. They've learned that infrastructure isn't just about servers and networks; it's about people and processes.

The migration to ap-south-1 was completed successfully, meeting all regulatory requirements and improving latency for their Indian customers. But the real success was the transformation of the organization itself—from a collection of siloed teams to a cohesive engineering culture.

As I packed up my laptop after the final handover meeting, I reflected on Conway's Law, the observation that organizations design systems mirroring their own communication structure. What's often missed is that the reverse can also be true: thoughtful system design can reshape organizations themselves.

The infrastructure repository, once a chaotic mess of tangled dependencies and unclear ownership, had become a blueprint for organizational excellence. In the transformation, I had witnessed something beautiful: the power of technical work to drive cultural change, one module at a time.

This blog post is based on a real client engagement. Technical details have been modified to protect client confidentiality, but the organizational insights and transformation patterns are authentic.