Centralised logging

Logs from the various components of a distributed system need to come together in a central place, where users can view them to debug problems, monitor the system, and trace how information flows between components.

Anatomy of a log:

What does a log entry contain, and how does it flow from the application through the rest of the stack?

Usual process:

Applications write logs to STDOUT, typically in a predefined format. Another process (for example, the container runtime) continuously redirects STDOUT to a file. A third process, usually a log agent, continuously reads that file and sends the logs to a different location.

App writes logs to STDOUT --> a process writes the logs to a file --> an agent or another process reads the file --> the agent sends the logs to a central server (often behind a load balancer) --> the central server processes the logs --> the processed data is stored in a database.
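The agent step in this pipeline can be sketched in a few lines of Python. This is a minimal illustration under assumptions, not how Filebeat, Fluentd, or Vector work internally: the hypothetical read_new_lines function reads whatever was appended to the file since the last saved byte offset and holds back a half-written final line. Keeping that offset is what lets an agent resume after a restart without re-sending or skipping logs (Filebeat, for example, tracks per-file offsets in its registry). File rotation and truncation are left out.

```python
from __future__ import annotations

import os
import tempfile


def read_new_lines(path: str, offset: int) -> tuple[list[str], int]:
    """Return complete lines appended after byte `offset`, plus the new offset.

    A trailing partial line (no newline yet) is left for the next call,
    so half-written log entries are never shipped.
    """
    with open(path, "rb") as f:
        f.seek(offset)
        data = f.read()
    end = data.rfind(b"\n")
    if end == -1:
        return [], offset  # nothing complete has been appended yet
    chunk = data[: end + 1]
    return chunk.decode("utf-8").splitlines(), offset + len(chunk)


if __name__ == "__main__":
    # Simulate an application appending to its log file between two agent polls.
    path = os.path.join(tempfile.mkdtemp(), "app.log")
    with open(path, "w") as f:
        f.write("INFO started\nWARN slow\nERR")  # last entry still being written
    lines, offset = read_new_lines(path, 0)
    print(lines, offset)  # a real agent would persist this offset
    with open(path, "a") as f:
        f.write("OR failed\n")
    lines, offset = read_new_lines(path, offset)
    print(lines, offset)
```

The first poll ships the two complete lines; the second picks up the now-finished third line starting from the saved offset.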

Log Structure and Components

A typical log entry contains several key components:

Timestamp: When the event occurred (usually in ISO 8601 format like 2018-03-02T16:26:45.123Z)

Log Level: The severity or importance of the message

Logger Name/Source: Which component or module generated the log

Message: The actual log content describing what happened

Context/Metadata: Additional structured data like user IDs, request IDs, trace IDs, etc.

Example log entry:

2018-02-02T16:26:45.123Z INFO [user-service] User login successful userId=12345 requestId=abc-def-123 duration=45ms
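To make the components concrete, the example entry can be split back into its parts with a regular expression. The pattern below only fits this one example layout (an assumption; every framework formats lines differently), and the hypothetical parse_line function treats everything before the first key=value pair as the message.

```python
from __future__ import annotations

import re
from dataclasses import dataclass, field

# Matches the example layout only:
#   <timestamp> <LEVEL> [<logger>] <message> key=value key=value ...
LINE_RE = re.compile(
    r"^(?P<timestamp>\S+)\s+(?P<level>[A-Z]+)\s+\[(?P<logger>[^\]]+)\]\s+(?P<rest>.*)$"
)
KV_RE = re.compile(r"(\w+)=(\S+)")  # context fields such as userId=12345


@dataclass
class LogEntry:
    timestamp: str
    level: str
    logger: str
    message: str
    context: dict[str, str] = field(default_factory=dict)


def parse_line(line: str) -> LogEntry:
    m = LINE_RE.match(line)
    if m is None:
        raise ValueError(f"unrecognised log line: {line!r}")
    rest = m.group("rest")
    first_kv = KV_RE.search(rest)
    # Everything before the first key=value pair is the human-readable message.
    message = rest[: first_kv.start()].strip() if first_kv else rest.strip()
    return LogEntry(
        timestamp=m.group("timestamp"),
        level=m.group("level"),
        logger=m.group("logger"),
        message=message,
        context=dict(KV_RE.findall(rest)),
    )


entry = parse_line(
    "2018-02-02T16:26:45.123Z INFO [user-service] User login successful "
    "userId=12345 requestId=abc-def-123 duration=45ms"
)
print(entry)
```

All context values stay strings here; turning duration=45ms into a number would be a later processing step.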

Log Levels Hierarchy

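Log levels follow a conventional hierarchy from most to least severe (exact names vary between frameworks, e.g. Log4j uses FATAL and WARN while Python's logging module uses CRITICAL and WARNING):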

FATAL/CRITICAL: System is unusable, immediate action required

- Application crashes, system failures, critical security breaches

- Example:

FATAL Database connection pool exhausted, shutting down

ERROR: Error conditions that don't stop the application but need attention

- Failed requests, caught exceptions, validation failures

- Example:

ERROR Failed to process payment for user 12345: Invalid credit card

WARN: Potentially harmful situations or unexpected conditions

- Deprecated API usage, configuration issues, retries

- Example:

WARN API rate limit approaching threshold (80% of limit reached)

INFO: General informational messages about application flow

- User actions, business logic events, system state changes

- Example:

INFO User 12345 successfully placed order #67890

DEBUG: Detailed information for diagnosing problems

- Variable values, method entry/exit, internal state

- Example:

DEBUG Processing order validation: items=3, total=$45.99

TRACE: Very detailed information, typically used for following execution paths

- Fine-grained debugging, performance profiling

- Example:

TRACE Entering calculateTax() with amount=45.99, region=CA

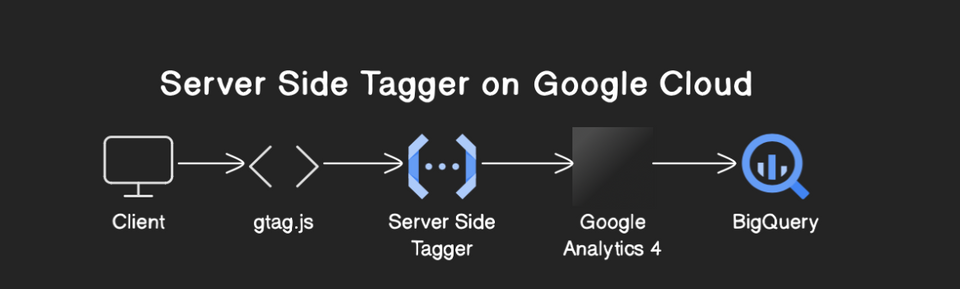
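Most frameworks apply these levels as a threshold: a logger set to a given level emits that level and everything more severe, and discards the rest. A sketch with Python's standard logging module, reusing the example messages above. Python has no built-in TRACE level, so registering one at numeric value 5 is an assumption of this example, and Python prints WARNING and CRITICAL rather than WARN and FATAL.

```python
import io
import logging

# Python defines CRITICAL (alias FATAL), ERROR, WARNING, INFO and DEBUG.
# TRACE is not built in; registering it at 5 (below DEBUG=10) is an assumption.
TRACE = 5
logging.addLevelName(TRACE, "TRACE")

buf = io.StringIO()
handler = logging.StreamHandler(buf)
handler.setFormatter(logging.Formatter("%(levelname)s %(message)s"))

log = logging.getLogger("order-service")
log.addHandler(handler)
log.propagate = False          # keep output out of the root logger
log.setLevel(logging.WARNING)  # threshold: WARNING and everything more severe

log.critical("Database connection pool exhausted, shutting down")
log.error("Failed to process payment for user 12345: Invalid credit card")
log.warning("API rate limit approaching threshold (80% of limit reached)")
log.info("User 12345 successfully placed order #67890")                  # dropped
log.debug("Processing order validation: items=3, total=$45.99")          # dropped
log.log(TRACE, "Entering calculateTax() with amount=45.99, region=CA")   # dropped

print(buf.getvalue(), end="")
```

Only the first three messages are written. Lowering the threshold with log.setLevel(TRACE) would let all six through, which is why DEBUG and TRACE are usually switched on only while investigating a problem.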

Log Flow Architecture

The complete log flow in modern distributed systems typically follows this pattern:

1. Application Layer

- Applications use logging frameworks (Logback, Log4j, Winston, etc.)

- Logs are written to STDOUT/STDERR or directly to files

- Structured logging (JSON format) is preferred for machine readability
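A structured logger writes one JSON object per line to STDOUT, which a collection agent can forward without any parsing rules. A minimal sketch using a custom logging.Formatter in Python; the JsonFormatter class and the field names (timestamp, level, logger, message, plus anything passed under a hypothetical "context" key) are assumptions for illustration.

```python
import json
import logging
import sys
from datetime import datetime, timezone


class JsonFormatter(logging.Formatter):
    """Render each log record as a single-line JSON object."""

    def format(self, record: logging.LogRecord) -> str:
        entry = {
            # ISO 8601 UTC timestamp with millisecond precision, ending in "Z"
            "timestamp": datetime.fromtimestamp(record.created, tz=timezone.utc)
            .isoformat(timespec="milliseconds")
            .replace("+00:00", "Z"),
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
        }
        # Structured context passed as log.info(..., extra={"context": {...}})
        entry.update(getattr(record, "context", {}))
        if record.exc_info:
            entry["exception"] = self.formatException(record.exc_info)
        return json.dumps(entry)


handler = logging.StreamHandler(sys.stdout)  # STDOUT, where the agent picks it up
handler.setFormatter(JsonFormatter())
log = logging.getLogger("user-service")
log.addHandler(handler)
log.setLevel(logging.INFO)
log.propagate = False

log.info("User login successful", extra={"context": {"userId": 12345, "requestId": "abc-def-123"}})
```

Because every line is self-describing JSON, downstream stages can filter on fields such as requestId directly instead of relying on a regular expression.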

2. Collection Layer

- Log Agents: Fluentd, Filebeat, Vector, or custom agents

- Sidecar Pattern: Agent runs alongside application container

- Host-based: Single agent per host collecting from multiple applications

3. Processing Layer

- Parsing: Extract structured data from raw log text

- Enrichment: Add metadata like hostname, environment, service version

- Filtering: Remove sensitive data or noise

- Routing: Send different log types to different destinations
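The four processing steps can be chained as plain functions. This is a sketch, not the configuration model of Logstash, Fluentd, or any other tool: the card-number pattern, the SENSITIVE_KEYS and NOISE_PREFIXES rules, and the destination names "errors", "debug-archive", and "main" are all hypothetical.

```python
from __future__ import annotations

import json
import re
import socket
from typing import Optional

# Illustrative (hypothetical) rules.
CARD_RE = re.compile(r"\b\d{13,16}\b")   # crude card-number pattern
SENSITIVE_KEYS = {"password", "token"}   # fields that must never be stored
NOISE_PREFIXES = ("GET /healthz",)       # messages not worth keeping


def parse(raw: str) -> dict:
    """Parse a JSON log line; wrap unstructured text as a plain message."""
    try:
        event = json.loads(raw)
    except json.JSONDecodeError:
        return {"message": raw}
    return event if isinstance(event, dict) else {"message": raw}


def enrich(event: dict, env: str, version: str) -> dict:
    """Add deployment metadata that the application did not log itself."""
    return {**event, "host": socket.gethostname(), "env": env, "service_version": version}


def scrub(event: dict) -> Optional[dict]:
    """Drop noise and strip sensitive data; None means 'discard this event'."""
    message = str(event.get("message", ""))
    if message.startswith(NOISE_PREFIXES):
        return None
    clean = {k: v for k, v in event.items() if k not in SENSITIVE_KEYS}
    clean["message"] = CARD_RE.sub("[REDACTED]", message)
    return clean


def route(event: dict) -> str:
    """Pick a destination by level (destination names are hypothetical)."""
    level = str(event.get("level", "INFO")).upper()
    if level in {"FATAL", "CRITICAL", "ERROR"}:
        return "errors"
    if level in {"DEBUG", "TRACE"}:
        return "debug-archive"
    return "main"


def process(raw: str, env: str, version: str) -> Optional[tuple[str, dict]]:
    event = scrub(enrich(parse(raw), env, version))
    return None if event is None else (route(event), event)


raw = '{"level": "ERROR", "message": "card 4111111111111111 declined", "password": "x"}'
print(process(raw, env="prod", version="1.0.0"))
```

Filtering before enriching would avoid enriching events that are then discarded; the order here simply follows the list above.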

4. Transport Layer

- Message Queues: Kafka, RabbitMQ for reliable delivery

- Direct Streaming: HTTP/gRPC endpoints for real-time ingestion

- Buffering: Handle traffic spikes and network issues
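Buffering decouples the application from the network: logs accumulate in memory and are shipped in batches, so a slow or unavailable backend does not block the producer. The LogBuffer class below is a hypothetical, single-threaded sketch; its overflow policy (drop the oldest entries once capacity is reached) and its send callback contract (return True on success) are assumptions.

```python
from __future__ import annotations

from collections import deque
from itertools import islice
from typing import Callable


class LogBuffer:
    """Bounded in-memory buffer that ships log lines in batches."""

    def __init__(self, send: Callable[[list[str]], bool], capacity: int = 10_000, batch_size: int = 500):
        self.send = send                                  # returns True if the batch was delivered
        self.batch_size = batch_size
        self.queue: deque[str] = deque(maxlen=capacity)  # a full deque discards from the left
        self.dropped = 0

    def append(self, line: str) -> None:
        if len(self.queue) == self.queue.maxlen:
            self.dropped += 1                             # the oldest line is about to be evicted
        self.queue.append(line)

    def flush(self) -> int:
        """Send batches of up to batch_size lines; stop at the first failed delivery."""
        sent = 0
        while self.queue:
            batch = list(islice(self.queue, self.batch_size))
            if not self.send(batch):
                break                                     # keep the batch and retry on the next flush
            for _ in batch:
                self.queue.popleft()
            sent += len(batch)
        return sent


delivered = []


def fake_send(batch):  # stand-in for an HTTP or message-queue producer call
    delivered.append(batch)
    return True


buffer = LogBuffer(fake_send, capacity=5, batch_size=2)
for i in range(7):
    buffer.append(f"line{i}")
print(buffer.dropped, buffer.flush(), delivered)
```

Dropping old entries keeps the application responsive but loses data during long outages; the alternatives are to block the producer (backpressure) or spill to disk, which is one reason durable queues such as Kafka appear in this layer.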

5. Storage Layer

- Search and analytics stores: Elasticsearch (full-text search) or ClickHouse (columnar analytics) for searchable logs

- Object storage: S3, GCS for long-term archival

- Data lakes: For analytics and compliance requirements

6. Analysis Layer

- Search and Query: Kibana, Grafana for log exploration

- Alerting: Automated notifications based on log patterns

- Dashboards: Visual representation of log metrics and trends
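A common alerting rule on log patterns is "more than N errors within M seconds". A minimal sliding-window sketch; the ErrorRateAlert class, its threshold semantics, and the assumption that events arrive in timestamp order are all illustrative.

```python
from __future__ import annotations

from collections import deque


class ErrorRateAlert:
    """Fire when more than `threshold` severe events fall within `window` seconds."""

    SEVERE = {"FATAL", "CRITICAL", "ERROR"}

    def __init__(self, threshold: int, window: float):
        self.threshold = threshold
        self.window = window
        self.times: deque[float] = deque()  # timestamps of recent severe events

    def observe(self, level: str, ts: float) -> bool:
        """Record one event at time ts (seconds); return True if the alert should fire."""
        if level.upper() not in self.SEVERE:
            return False
        self.times.append(ts)
        # Evict events that have slid out of the window (assumes ts never decreases).
        while self.times and self.times[0] <= ts - self.window:
            self.times.popleft()
        return len(self.times) > self.threshold


alert = ErrorRateAlert(threshold=2, window=60)
for level, ts in [("ERROR", 0), ("INFO", 1), ("ERROR", 10), ("ERROR", 20), ("ERROR", 75)]:
    print(level, ts, alert.observe(level, ts))
```

In practice the same rule is often expressed as a query in the analysis layer (for example, a Kibana or Grafana alert) rather than written by hand.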

Best Practices

Structured Logging: Use consistent JSON format with standard fields across all services

Correlation IDs: Include trace/request IDs to follow requests across services

Contextual Information: Add relevant metadata without logging sensitive data

Appropriate Log Levels: Use levels consistently across your organization

Performance Consideration: Use asynchronous logging to avoid blocking application threads

Log Retention: Implement appropriate retention policies for cost and compliance

Sampling: For high-volume applications, consider sampling to reduce storage costs
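Correlation IDs only help if every log line written while handling a request carries the same ID. One way to do that in Python is a contextvars.ContextVar plus a logging.Filter that copies the ID onto each record; each thread and asyncio task sees its own value of the variable. The request_id variable, the handle_request function, and the requestId= output field are hypothetical names for this sketch. In a real service the ID would typically be read from an incoming request header and forwarded on outgoing calls.

```python
from __future__ import annotations

import contextvars
import io
import logging
import uuid

# Correlation ID for the current request; each thread and asyncio task sees its own value.
request_id: contextvars.ContextVar[str] = contextvars.ContextVar("request_id", default="-")


class RequestIdFilter(logging.Filter):
    """Stamp the current correlation ID onto every record the handler processes."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.request_id = request_id.get()
        return True


def handle_request(log: logging.Logger, incoming_id: str | None = None) -> str:
    # Reuse the caller's ID (e.g. taken from a request header) or mint a new one.
    rid = incoming_id or str(uuid.uuid4())
    token = request_id.set(rid)
    try:
        log.info("payment authorised")  # every log call in here carries rid
    finally:
        request_id.reset(token)         # don't leak the ID into the next request
    return rid


buf = io.StringIO()
handler = logging.StreamHandler(buf)
handler.addFilter(RequestIdFilter())
handler.setFormatter(logging.Formatter("%(levelname)s %(message)s requestId=%(request_id)s"))
log = logging.getLogger("payment-service")
log.addHandler(handler)
log.setLevel(logging.INFO)
log.propagate = False

handle_request(log, "abc-def-123")
print(buf.getvalue(), end="")  # INFO payment authorised requestId=abc-def-123
```

Setting the ID once at the request boundary means individual log calls never have to pass it explicitly, so it cannot be forgotten.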

Common Logging Patterns

Request/Response Logging: Log incoming requests and outgoing responses with timing

Error Context: Include stack traces and relevant state when logging errors

Business Events: Log important business actions for audit trails

Performance Metrics: Log timing information for critical operations

Security Events: Log authentication, authorization, and security-related events
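Request/response logging with timing can be wrapped around handler code so it is never forgotten. A sketch as a Python context manager; the logged_request helper, the default status of 200, and the message format are assumptions. Failures are logged with log.exception, which records at ERROR level and attaches the stack trace, in line with the error-context pattern.

```python
from __future__ import annotations

import io
import logging
import time
from contextlib import contextmanager
from typing import Iterator


@contextmanager
def logged_request(log: logging.Logger, method: str, path: str) -> Iterator[dict]:
    """Log the start and end of a request with its status and duration."""
    start = time.perf_counter()
    log.info("request started method=%s path=%s", method, path)
    info = {"status": 200}  # assumed default; handler code may overwrite it
    try:
        yield info
    except Exception:
        info["status"] = 500
        # log.exception logs at ERROR level and appends the stack trace
        log.exception("request failed method=%s path=%s", method, path)
        raise
    finally:
        elapsed_ms = (time.perf_counter() - start) * 1000
        log.info("request finished method=%s path=%s status=%d duration=%.1fms",
                 method, path, info["status"], elapsed_ms)


buf = io.StringIO()
handler = logging.StreamHandler(buf)
handler.setFormatter(logging.Formatter("%(levelname)s %(message)s"))
log = logging.getLogger("api")
log.addHandler(handler)
log.setLevel(logging.INFO)
log.propagate = False

with logged_request(log, "POST", "/orders") as info:
    info["status"] = 201  # the handler reports its own status
print(buf.getvalue(), end="")
```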

Together, these practices support monitoring, debugging, and observability in distributed systems while keeping logging overhead and storage costs under control.

Centralized Logging

Centralized logging is the practice of collecting, aggregating, and storing logs from all components of a distributed system in a single, unified location. This approach is essential for modern applications that span multiple services, containers, and infrastructure components.

Why Centralized Logging?

Unified View: Instead of SSH-ing into individual servers or containers to check logs, operators can search and analyze logs from all services in one place

Correlation: Easily trace requests across multiple microservices using correlation IDs and timestamps

Scalability: Handle log volume from hundreds or thousands of service instances without manual intervention

Persistence: Logs survive container restarts, node failures, and deployments

Security: Centralized access control and audit trails for log access
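Once logs from all services sit in one place, following a request means merging their streams in time order and filtering on the correlation ID. A sketch that does this in memory with heapq.merge; it assumes JSON lines, a requestId field, and ISO 8601 UTC timestamps in an identical format so that string order equals time order. The sample lines are invented for illustration. Clock skew between hosts can still misorder events that are only milliseconds apart.

```python
from __future__ import annotations

import heapq
import json
from typing import Iterable, Iterator


def trace_request(streams: Iterable[Iterable[str]], request_id: str) -> Iterator[dict]:
    """Merge per-service JSON log streams by timestamp, keeping one request's events.

    Each stream must already be sorted by its "timestamp" field.
    """
    parsed = [(json.loads(line) for line in stream) for stream in streams]
    merged = heapq.merge(*parsed, key=lambda event: event["timestamp"])
    return (event for event in merged if event.get("requestId") == request_id)


gateway = [
    '{"timestamp": "2018-02-02T16:26:45.100Z", "service": "gateway", "requestId": "abc-def-123", "message": "request received"}',
    '{"timestamp": "2018-02-02T16:26:45.190Z", "service": "gateway", "requestId": "abc-def-123", "message": "response sent"}',
]
users = [
    '{"timestamp": "2018-02-02T16:26:45.123Z", "service": "user-service", "requestId": "abc-def-123", "message": "User login successful"}',
    '{"timestamp": "2018-02-02T16:26:45.150Z", "service": "user-service", "requestId": "zzz-999", "message": "User login successful"}',
]
for event in trace_request([gateway, users], "abc-def-123"):
    print(event["timestamp"], event["service"], event["message"])
```

A centralized store does the same thing with an indexed query on the correlation ID, which is what makes it practical across thousands of instances.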

Popular Centralized Logging Solutions

ELK Stack (Elasticsearch, Logstash, Kibana)

- Elasticsearch for storage and search

- Logstash for processing and transformation

- Kibana for visualization and dashboards

EFK Stack (Elasticsearch, Fluentd, Kibana)

- Fluentd replaces Logstash as the collection and processing component

- Lighter on memory than the JVM-based Logstash, which matters when an agent runs on every node

Cloud-native Solutions

- AWS CloudWatch Logs

- Google Cloud Logging

- Azure Monitor Logs

Other Alternatives

- Grafana Loki (indexes only labels rather than full log text, which keeps indexing and storage costs low)

- Splunk (enterprise-focused)

- Datadog, New Relic (SaaS observability platforms)

Implementation Considerations

Log Shipping Strategy: Choose between agent-based shipping (Filebeat, Fluentd) and shipping directly from the application

Data Retention: Balance storage costs with compliance and debugging needs

Search Performance: Design indices and partitioning strategies for efficient queries

High Availability: Ensure logging infrastructure doesn't become a single point of failure

Cost Management: Implement log sampling, filtering, and tiered storage for cost optimization

Security: Encrypt logs in transit and at rest, implement proper access controls
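A useful refinement of sampling for cost management is to keep every WARN-or-worse event and sample only the lower levels, deciding per trace rather than per line so that a sampled request keeps all of its logs across services. A sketch that hashes the trace ID into the range [0, 1); the should_keep function, the level set, and the use of SHA-256 are assumptions.

```python
import hashlib

ALWAYS_KEEP = {"FATAL", "CRITICAL", "ERROR", "WARN", "WARNING"}


def should_keep(level: str, trace_id: str, rate: float) -> bool:
    """Keep every WARN-or-worse event; keep about `rate` of the rest, decided per trace.

    Hashing the trace ID instead of calling random() means every service makes
    the same decision for the same request, so sampled traces stay complete.
    """
    if level.upper() in ALWAYS_KEEP:
        return True
    digest = hashlib.sha256(trace_id.encode("utf-8")).digest()
    # Top 53 bits -> float in [0, 1) with no rounding up to 1.0
    bucket = (int.from_bytes(digest[:8], "big") >> 11) / 2**53
    return bucket < rate


kept = sum(should_keep("INFO", f"trace-{i}", 0.1) for i in range(10_000))
print(kept)  # roughly 10% of 10,000
```

With rate=0.1, INFO and DEBUG volume drops by about 90%, while every error and every line belonging to a sampled trace is still stored.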

Centralized logging turns observability in a distributed system from a manual, per-server process into an automated, searchable one, which shortens troubleshooting and gives a system-wide view of behavior.