Blue-green deployment

Blue-green is a software deployment strategy that solves a particular problem: keeping the previous version available until the new version is fully validated.

How it works:

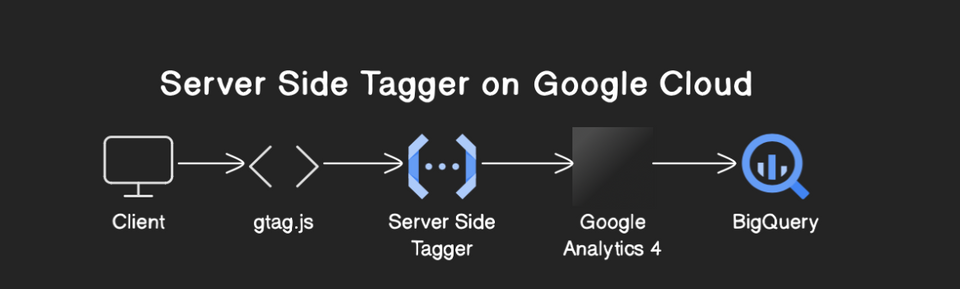

Let's say we have to deploy our software. It uses a typical two-tier architecture: a load balancer with a set of servers behind it.

For every new version, we create a new set of servers and attach them to the same load balancer. At this point, traffic goes to both versions of the software. Once we validate that the new version is working, we mark the old set for termination.

In AWS terminology:

- Create a new AMI for every new version of our software.

- Create a launch template version (or a launch configuration) with the above AMI ID.

- Create an Auto Scaling group from the new launch template version.

- Attach this Auto Scaling group to the load balancer.

- Wait for some time (10 minutes, say; the duration is up to us!) to make sure everything is working fine with the new Auto Scaling group.

- Scale the older Auto Scaling group down to zero and delete it.
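The steps above could be scripted in Python roughly as follows. This is a minimal sketch under several assumptions: the AMI is already built, a launch template and an Application Load Balancer target group already exist, and the `ec2` and `autoscaling` arguments are boto3 clients (e.g. `boto3.client("ec2")` and `boto3.client("autoscaling")`). The boto3 methods called are real, but `blue_green_deploy`, `retire`, and the `is_healthy` check are hypothetical names for illustration. There are no retries and no waiting for instances to pass target-group health checks.

```python
import time
from typing import Callable

# Sketch only: blue_green_deploy, retire and is_healthy are hypothetical names;
# the client methods are real boto3 EC2 / Auto Scaling calls.


def retire(autoscaling, asg_name: str) -> None:
    """Scale an Auto Scaling group to zero, then delete it."""
    autoscaling.update_auto_scaling_group(
        AutoScalingGroupName=asg_name, MinSize=0, MaxSize=0, DesiredCapacity=0)
    # ForceDelete terminates any instances that are still shutting down.
    autoscaling.delete_auto_scaling_group(
        AutoScalingGroupName=asg_name, ForceDelete=True)


def blue_green_deploy(ec2, autoscaling, *, ami_id: str, template_id: str,
                      new_asg: str, old_asg: str, target_group_arn: str,
                      subnets: str, size: int,
                      is_healthy: Callable[[], bool],
                      bake_seconds: float = 600) -> bool:
    """Roll out ami_id as a green ASG; retire blue only if green looks healthy."""
    # 1. New launch template version pointing at the new AMI.
    resp = ec2.create_launch_template_version(
        LaunchTemplateId=template_id,
        SourceVersion="$Latest",
        LaunchTemplateData={"ImageId": ami_id},
    )
    version = str(resp["LaunchTemplateVersion"]["VersionNumber"])

    # 2. Green ASG built from that exact template version.
    autoscaling.create_auto_scaling_group(
        AutoScalingGroupName=new_asg,
        LaunchTemplate={"LaunchTemplateId": template_id, "Version": version},
        MinSize=size, MaxSize=size, DesiredCapacity=size,
        VPCZoneIdentifier=subnets,  # comma-separated subnet IDs
    )

    # 3. Put green behind the same load balancer via its target group.
    autoscaling.attach_load_balancer_target_groups(
        AutoScalingGroupName=new_asg, TargetGroupARNs=[target_group_arn])

    # 4. Wait while blue and green both serve traffic (600 s = 10 minutes).
    time.sleep(bake_seconds)
    if not is_healthy():
        # Assumed rollback: remove green, leave blue untouched.
        retire(autoscaling, new_asg)
        return False

    # 5. Green is good: scale blue to zero and delete it.
    retire(autoscaling, old_asg)
    return True
```

Passing the clients in, rather than creating them inside the function, keeps the sketch testable with fake clients and leaves credentials and region setup to the caller.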

Terminology:

- The new version's set of servers is "green".

- The current (older) version's set is "blue".

- Immutable: a piece or set of infrastructure is never changed in place. It is only created or destroyed.

Canary:

- A slight variation of blue-green deployment: while we're validating the new version, we deploy only a small number of green servers at a time.

For example, if we currently have 100 blue servers, we deploy 20 green servers and validate the deployment, then add 20 more and validate again, repeating until we reach 100.
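The canary loop can be sketched in a few lines of Python. `canary_rollout` and its `validate` callback are hypothetical; in a real rollout, `validate` would be where we scale the green Auto Scaling group to the given size and check error rates or other metrics, which this sketch does not do.

```python
from typing import Callable, List


def canary_rollout(total: int, step: int,
                   validate: Callable[[int], bool]) -> List[int]:
    """Grow the green fleet by `step` servers up to `total`, validating after each step.

    Returns the green sizes that passed validation. A list that stops short
    of `total` means the rollout was halted and blue should keep serving.
    """
    if step <= 0:
        raise ValueError("step must be positive")
    passed: List[int] = []
    green = 0
    while green < total:
        green = min(green + step, total)  # never overshoot the target size
        # Hypothetical hook: scale green to `green` servers, then check health.
        if not validate(green):
            break
        passed.append(green)
    return passed
```

With the numbers from the example, `canary_rollout(100, 20, lambda n: True)` returns `[20, 40, 60, 80, 100]`, while a check that fails at 60 servers (`lambda n: n < 60`) stops with `[20, 40]`. Assuming the load balancer spreads requests evenly across all 120 servers, the first 20 green servers would receive about 20/120, roughly 17%, of the traffic.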

How to implement it:

- These strategies can be implemented with many tools, including Terraform, Ansible/Chef, AWS CodeDeploy, and Python or Bash scripts.

Why not replace the load balancer every time?

- Changing the load balancer means we have to inform all the services that call our software. Technically, we can handle this with DNS and other sophisticated mechanisms. It's inefficient, but it works.

- The other major problem: load balancers are nothing but servers running certain code. As traffic grows, AWS adds more of these servers behind the scenes to handle it. So, if our average load is 100 req/sec and it grows to 100k req/sec over a week, AWS learns this pattern and scales up the load balancer's underlying servers accordingly.

Creating a new load balancer every time means AWS has no estimate of how much load it needs to handle. So, if the new load balancer is sized for about 100 req/sec and we suddenly send it 50k req/sec, most of our requests could end up with 5xx errors.
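To put "most of our requests" in perspective, here is a deliberately crude model: assume the new load balancer can serve a fixed `capacity_rps` and every request above that fails. Real AWS load balancers scale gradually and do not behave exactly like this, and `failed_fraction` is a hypothetical helper for illustration only.

```python
def failed_fraction(capacity_rps: float, offered_rps: float) -> float:
    """Toy model: serve at most capacity_rps; every request above it fails."""
    if offered_rps <= 0:
        return 0.0
    return max(0.0, 1.0 - capacity_rps / offered_rps)


# A brand-new load balancer sized for ~100 req/s receiving 50k req/s:
print(f"{failed_fraction(100, 50_000):.1%}")  # 99.8%
```

Even under this generous model, only 100 of every 50,000 requests per second get through, so nearly all of them fail until the load balancer catches up.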

Note: If we're creating a new load balancer and expect the initial traffic to be significantly high, we can ask AWS to pre-warm it via a support ticket, and they will scale up the load balancer's servers as necessary.